In the world of APIs and microservices, JSON is everywhere. It’s simple, human-readable, and works with virtually every programming language out there. But when you think about it, relying on a human-readable format for machine-to-machine data exchange can seem a bit odd, especially when performance and data efficiency are what really matter. When you’re operating at scale, JSON can quickly turn into a bottleneck.

Here’s why JSON is considered data-heavy, and how binary RPC protocols like gRPC with Protobuf offer a faster, leaner alternative.

1. JSON is Verbose by Design

JSON is a text-based format that prioritizes readability over performance.

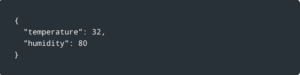

Example:

- Keys like “temperature” and “humidity” are full-length strings.

- Structural characters like {, }, ", :, and , add overhead.

JSON is normally encoded as UTF-8, in which each ASCII character takes 1 byte. For the example payload:

- The string “temperature” takes 11 bytes.

- The string “humidity” takes 8 bytes.

- The structural characters add 9 bytes: { and } (2), four " quote marks (4), two colons (2), and one comma (1).

- The numeric values 32 and 80 take 4 bytes (two ASCII digits each).

- Total size of the minified JSON payload: 32 bytes (a few more if it includes whitespace).
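You can confirm the count in Python. This sketch assumes the payload in the image is {"temperature": 32, "humidity": 80} serialized without whitespace, which matches the byte breakdown above.

```python
import json

# Assumed example payload, reconstructed from the byte counts above.
reading = {"temperature": 32, "humidity": 80}

# separators=(",", ":") drops the spaces json.dumps inserts by default,
# giving the minified form most APIs send.
payload = json.dumps(reading, separators=(",", ":")).encode("utf-8")

print(payload)       # b'{"temperature":32,"humidity":80}'
print(len(payload))  # 32
```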

In Protobuf:

In the context of RPC (Remote Procedure Call), Protocol Buffers serve as both the Interface Definition Language (IDL) used to define remote services and the serialization format for the messages those services exchange.

It’s like a blueprint: it defines what RPC methods and data structures look like across different programming languages, and it specifies the compact binary format used to package and transmit that data over the network between client and server.

In Protobuf, field names like “temperature” and “humidity” are not sent at all in the actual transmitted data.

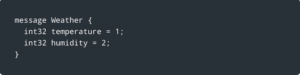

In the Protocol Buffer message definition (written in a .proto file), each field is assigned a field number:

- temperature = 1

- humidity = 2

This Protobuf definition creates a Weather message type with two 32-bit integer fields for storing weather data.

However, Protocol Buffers use variable-length encoding, so the actual space used may be less than 32 bits per field.

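- Values from 0 to 127 use just 1 byte

- Larger positive values use more bytes, up to a maximum of 5 bytes for int32

- Negative int32 values always use 10 bytes, because they are sign-extended to 64 bits before encoding (use sint32, which applies ZigZag encoding, for fields that often hold negative numbers)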
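Here’s a minimal Python sketch of those varint rules, written directly from the Protobuf wire-format description rather than with the protobuf library; encode_varint and zigzag32 are illustrative helper names, not library APIs.

```python
def encode_varint(value: int) -> bytes:
    """Encode an integer as a Protobuf base-128 varint.

    Negative values are first sign-extended to 64 bits (two's complement),
    as Protobuf does for int32/int64, which is why they always take 10 bytes.
    """
    if value < 0:
        value &= (1 << 64) - 1
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)  # high bit set: more bytes follow
        else:
            out.append(low7)  # high bit clear: this is the last byte
            return bytes(out)


def zigzag32(value: int) -> int:
    """ZigZag-map a signed 32-bit value as sint32 does: 0->0, -1->1, 1->2, -2->3."""
    return ((value << 1) ^ (value >> 31)) & 0xFFFFFFFF


for n in (0, 127, 128, 300, 2**31 - 1, -1):
    print(f"int32  {n:>11}: {len(encode_varint(n))} byte(s)")
print(f"sint32 {-1:>11}: {len(encode_varint(zigzag32(-1)))} byte(s)")
```

The 10-byte result for -1 is the reason sint32 exists: ZigZag maps small negative numbers to small unsigned ones before varint encoding, so -1 costs a single byte.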

Remember that this is only a schema definition: it is compiled into client and server code ahead of time and is not sent over the wire with each message.

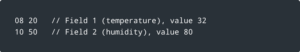

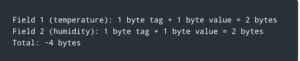

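Protobuf binary payload and its encoding breakdown:

- 0x08: tag for field 1 (temperature), wire type 0 (varint)

- 0x20: the value 32

- 0x10: tag for field 2 (humidity), wire type 0 (varint)

- 0x50: the value 80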

Here the same data shrinks from 32 bytes as JSON to just 4 bytes with Protobuf binary encoding: an 87.5% reduction in size.
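The 4-byte payload can be reproduced by hand. This Python sketch hand-rolls the Protobuf wire format for the Weather message, assuming both fields are int32 with field numbers 1 and 2 as in the schema, instead of using generated code; encode_varint and encode_field are illustrative helper names.

```python
def encode_varint(value: int) -> bytes:
    """Base-128 varint (non-negative values only, enough for this example)."""
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)
        else:
            out.append(low7)
            return bytes(out)


VARINT = 0  # wire type used for int32, int64, bool, enum, ...


def encode_field(field_number: int, value: int) -> bytes:
    # Tag = (field_number << 3) | wire_type, itself encoded as a varint.
    return encode_varint((field_number << 3) | VARINT) + encode_varint(value)


# Weather { int32 temperature = 1; int32 humidity = 2; }
message = encode_field(1, 32) + encode_field(2, 80)

json_size = 32  # minified JSON size from section 1
print(message.hex(" "))  # 08 20 10 50
print(f"{len(message)} bytes vs {json_size}: {1 - len(message) / json_size:.1%} smaller")
```

Note that the field names appear nowhere in the output; the tags 0x08 and 0x10 are all the receiver needs, because its own compiled schema maps them back to temperature and humidity.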

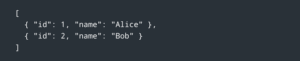

2. Repeated Field Names Add Bloat

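Each object in a JSON array repeats the field names, so an array of 1,000 weather readings spells out “temperature” and “humidity” 1,000 times each.

In binary formats like Protobuf, field names are never sent. The schema is compiled into client and server code ahead of time, and each value on the wire carries only a small numeric tag (1 byte for field numbers 1 through 15).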

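To see the effect at scale, this Python sketch serializes 1,000 identical readings both ways. The WeatherList wrapper message (repeated Weather readings = 1) is a hypothetical schema introduced for illustration, and the Protobuf bytes are hand-encoded from the wire format rather than produced by the protobuf library.

```python
import json


def encode_varint(value: int) -> bytes:
    """Base-128 varint for non-negative integers."""
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)
        else:
            out.append(low7)
            return bytes(out)


def encode_weather(temperature: int, humidity: int) -> bytes:
    # Fields 1 and 2, wire type 0 (varint): tags 0x08 and 0x10.
    return b"\x08" + encode_varint(temperature) + b"\x10" + encode_varint(humidity)


def encode_weather_list(readings: list[tuple[int, int]]) -> bytes:
    # Hypothetical wrapper: message WeatherList { repeated Weather readings = 1; }
    # Each element is length-delimited (wire type 2): tag 0x0A, length, message bytes.
    out = bytearray()
    for temperature, humidity in readings:
        body = encode_weather(temperature, humidity)
        out += b"\x0a" + encode_varint(len(body)) + body
    return bytes(out)


readings = [(32, 80)] * 1000

as_json = json.dumps(
    [{"temperature": t, "humidity": h} for t, h in readings],
    separators=(",", ":"),
).encode("utf-8")
as_proto = encode_weather_list(readings)

print(len(as_json))   # 33001: both field names repeated in every object
print(len(as_proto))  # 6000: 6 bytes per reading, no field names
```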

3. Text-Based Numbers and Booleans

JSON represents numbers and booleans as text, one byte per character:


- “true” is 4 bytes instead of 1.

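- “123” is 3 bytes, while a varint stores it in 1 byte (any value up to 127 fits in a single byte).

Binary protocols store these in compact binary forms (varints for integers and booleans, fixed-width IEEE 754 values for floats and doubles) that are smaller and faster to decode than decimal text.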
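A quick Python comparison, using illustrative values and hand-written encoders (an assumption; real code would use generated Protobuf classes): a boolean and an integer as varints, and a float as an 8-byte little-endian IEEE 754 double, which is how Protobuf lays out its double type. Sizes exclude the 1-byte field tag.

```python
import json
import struct


def encode_varint(value: int) -> bytes:
    """Base-128 varint for non-negative integers (Protobuf bool, int32, ...)."""
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)
        else:
            out.append(low7)
            return bytes(out)


# Illustrative values; a float is checked first because bool is a subclass of int.
samples = [True, 123, 0.1 + 0.2]

for value in samples:
    text = json.dumps(value).encode("utf-8")
    if isinstance(value, float):
        binary = struct.pack("<d", value)  # 8-byte little-endian IEEE 754 double
    else:
        binary = encode_varint(int(value))  # varint; True encodes as 1
    print(f"{text.decode():>20}: {len(text):>2} bytes as JSON text, {len(binary)} bytes binary")
```

The float case shows a second cost of text: 0.1 + 0.2 needs 19 characters to round-trip exactly in JSON, while the binary double is always 8 bytes and decodes without any text parsing.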

4. JSON Can’t Handle Raw Binary

JSON has no binary type. To send an image or file, you typically Base64-encode it into a string:


- Base64 increases size by ~33%.

- Adds CPU cost for encoding/decoding.

Binary RPC sends the bytes directly (in Protobuf, as a length-delimited bytes field), so no conversion is needed.
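A short Python sketch of the problem, using random bytes as a stand-in for an image (an assumption for illustration): the standard json module rejects raw bytes, and Base64 turns every 3 bytes into 4 characters.

```python
import base64
import json
import os

blob = os.urandom(3000)  # stand-in for an image or file: arbitrary, non-text bytes

# The json module has no binary type and rejects bytes outright.
try:
    json.dumps({"image": blob})
except TypeError as err:
    print("raw bytes rejected:", err)

# The usual workaround: Base64-encode the bytes into an ASCII string.
encoded = base64.b64encode(blob).decode("ascii")
document = json.dumps({"image": encoded})

print(len(blob), "raw bytes ->", len(encoded), "Base64 characters")  # 3000 -> 4000

# The receiver must decode before it can use the data (extra CPU work).
decoded = base64.b64decode(json.loads(document)["image"])
print(decoded == blob)  # True
```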

5. Higher Transport Overhead

JSON APIs usually use REST over HTTP/1.1:

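- Verbose text headers, sent uncompressed with every request

- No multiplexing: connections stay open by default, but each one handles a single request at a time, so clients queue requests or open extra connections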

RPC systems like gRPC:

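- Use HTTP/2 (gRPC) or, in some other RPC frameworks, raw TCP

- Multiplex many concurrent calls over one persistent connection, support bidirectional streaming, and compress headers (HPACK)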
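Within each HTTP/2 stream, gRPC delimits messages with a 5-byte prefix (a 1-byte compressed flag and a 4-byte big-endian length), which is what lets a single call stream many messages back to back. The Python sketch below shows only that framing step, assuming the 4-byte Weather message from section 1; HTTP/2 frames, headers, and HPACK are handled by the gRPC library and are not shown. grpc_frame and grpc_unframe are hypothetical helpers, not gRPC APIs.

```python
import struct


def grpc_frame(message: bytes, compressed: bool = False) -> bytes:
    """Prefix a serialized message as gRPC does inside an HTTP/2 stream:
    1-byte compressed flag + 4-byte big-endian length + message bytes."""
    return struct.pack(">BI", int(compressed), len(message)) + message


def grpc_unframe(stream: bytes) -> list[bytes]:
    """Split a buffer of back-to-back framed messages (e.g. a streaming call)."""
    messages, offset = [], 0
    while offset < len(stream):
        _flag, length = struct.unpack_from(">BI", stream, offset)
        offset += 5  # skip the prefix
        messages.append(stream[offset:offset + length])
        offset += length
    return messages


weather = bytes.fromhex("08201050")  # the 4-byte Weather message from section 1
stream = grpc_frame(weather) + grpc_frame(weather)  # two messages on one stream

print(stream.hex(" "))  # 00 00 00 00 04 08 20 10 50 00 00 00 00 04 08 20 10 50
print(len(grpc_unframe(stream)), "messages recovered")
```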

Real-World Use Case: Image Transfer

Sending a 5MB image:

- JSON (Base64-encoded) [Base64 size = 4 × ⌈original size / 3⌉, about original size × 4/3] → ~6.67MB

- gRPC (raw bytes) → 5MB

gRPC saves about 1.67MB (25% of the Base64-encoded size), plus the CPU cycles spent on Base64 encoding and decoding.
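The arithmetic can be checked with a small Python sketch. It assumes “5MB” means 5,000,000 bytes (which matches the ~6.67MB figure) and that the image travels in a hypothetical Protobuf field declared as bytes image = 1; it ignores the JSON key and quotes around the Base64 string and the 5-byte gRPC message prefix.

```python
def varint_len(n: int) -> int:
    """Bytes needed to encode a non-negative integer as a Protobuf varint."""
    return max(1, (n.bit_length() + 6) // 7)


def base64_len(n: int) -> int:
    """Base64 output length: every 3 input bytes become 4 characters, last group padded."""
    return 4 * ((n + 2) // 3)


def proto_bytes_field_len(n: int, field_number: int = 1) -> int:
    """Size of a hypothetical `bytes image = 1;` field: tag + varint length + raw bytes."""
    tag = (field_number << 3) | 2  # wire type 2 = length-delimited
    return varint_len(tag) + varint_len(n) + n


size = 5_000_000  # "5MB" taken as 5,000,000 bytes, matching the ~6.67MB figure
b64 = base64_len(size)
proto = proto_bytes_field_len(size)

print(f"Base64 in JSON: {b64:,} bytes")    # 6,666,668
print(f"Protobuf bytes: {proto:,} bytes")  # 5,000,005
print(f"Saved: {b64 - proto:,} bytes ({1 - proto / b64:.0%})")
```

Protobuf’s overhead for the raw bytes is just 5 bytes here: 1 byte of tag and a 4-byte varint length.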

Conclusion

So here’s the bottom line: JSON is great when you need something readable and simple to work with. But if you’re dealing with high-performance systems, moving lots of data around, or need real-time communication, RPC with binary formats like Protobuf will give you significantly better speed and efficiency.

The numbers don’t lie: you’re looking at smaller payloads, faster parsing, and more efficient transport. Plus, the tooling has gotten much better over the years, so the barrier to entry isn’t as high as it used to be.

If you’re building modern services where performance actually matters, it might be time to seriously consider moving beyond JSON. The performance gains can be substantial, and your users may well notice the difference.
